By Nina Sangma (Garo)

Where are we, the world’s Indigenous Peoples, going to be in the age of Artificial Intelligence? What will be our situation as Artificial Intelligence becomes an everyday reality for those of us, the “othered,” who have been left out of rooms and histories and memories? Historically oppressed and politically disenfranchised, Indigenous Peoples have served as test subjects for centuries of systemic silencing and dehumanization.

The human condition today is centered on and propelled by data in an endless continuum of consuming it, harvesting it, living it, and generating it through loop after loop of digital footprints on platforms meant to compete with sleep. For Indigenous Peoples, our identities are predestined and politicized, defined by our resistance as our existence through the ages and stages of colonization and imperialism to the present, the “age of AI,” which will exacerbate existing inequalities and violence.

Many of us have begun to unravel the extent to which AI will determine the human condition and have arrived at the inconvenient truth that AI is a bridge to unspeakable horrors if its governance does not prioritize the lived realities of those on the margins. Development per se has always been a dirty word to us, the Indigenous, who have borne the burdens of colonizer States’ so-called “developments” on our ancestral lands. Given their records, we can hardly expect States and corporations to regulate AI through governance that is aimed at being truly ethical and centered on the guiding principles of business and human rights.

Left and center: Nina Sangma (far left) at the Internet Governance Forum 2024 in Kyoto, Japan.

The stark truth is that the core values that protect human rights are counterintuitive to the values of corporations for whom it is “business as usual.” If democracy were profitable, human rights lawyers and defenders would not be sitting around multi-stakeholder engagement tables demanding accountability from Big Tech and creators of AI. The cognitive dissonance is tangible in spaces where the few of us who come from the Global South and represent Indigenous perspectives often leave feeling like we have been patted on the head, roundly patronized by the captains of industry.

A statement released last year on the existential threats posed by AI, signed by hundreds of AI experts, policy advisers, climate activists, and scholars, underlines their well-founded fears that we are hurtling towards a future that may not need humans and humanity, and that collective interventions and accountability frameworks are needed from diverse groups from civil society to ensure strong government regulation and a global regulatory framework.

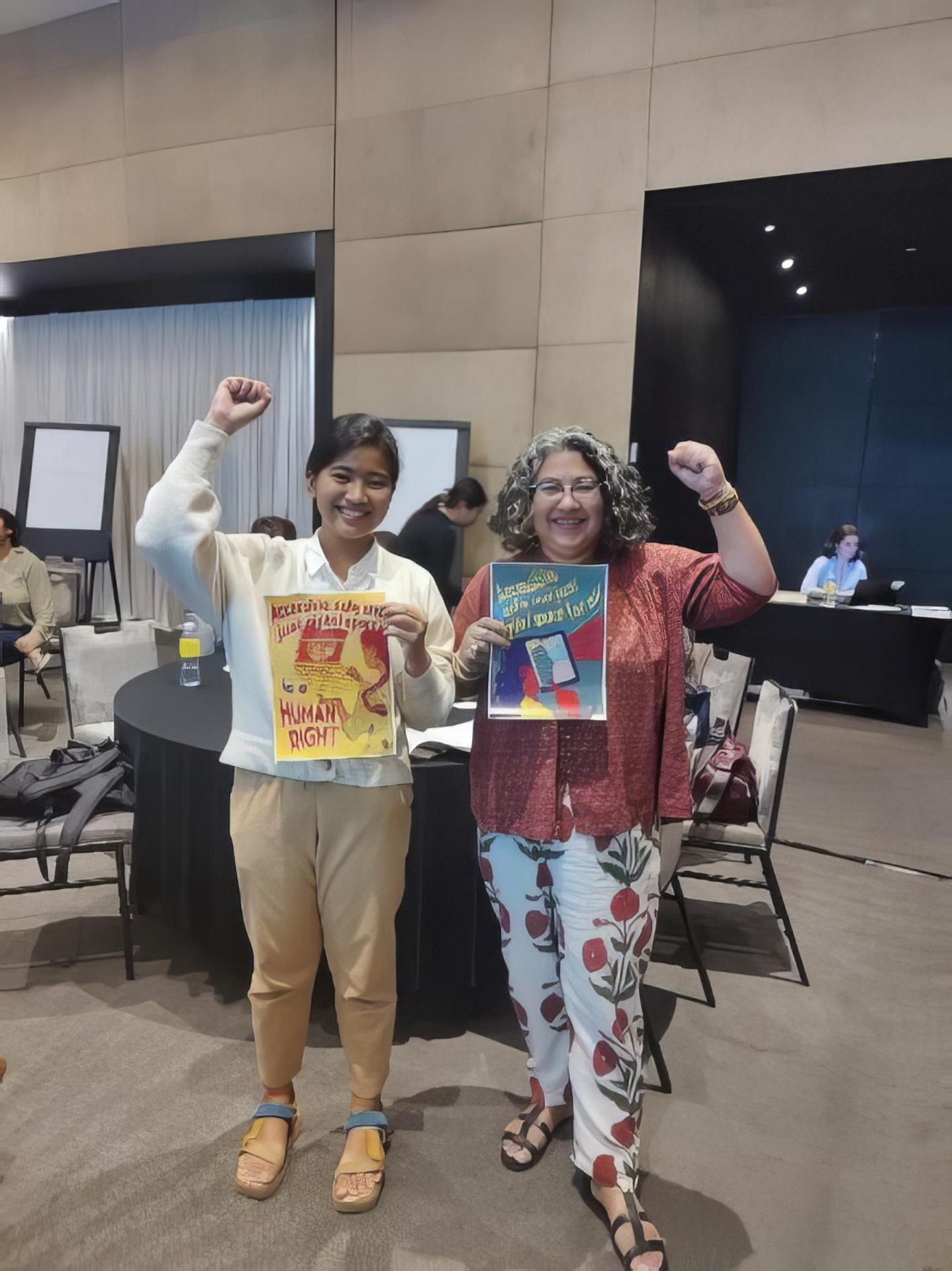

Eloisa Mesina, Secretary General of Katribu Youth, and Nina Sangma at the Asia-Pacific Conference on Internet Freedom in Bangkok, Thailand. All photos by Nina Sangma.

AI cannot, and should not, be seen in isolation. Earlier technologies have been the bridge to AI and will carry forward the prejudices and biases embedded in existing technology. One of the biggest concerns is the use of surveillance tech like Pegasus, which is being used to subvert the democratic rights of citizens and free speech, including the targeting of journalists to curb freedom of the Press and citizens’ right to information under the guise of national security. This, coupled with draconian laws like India’s Armed Forces Special Powers Act, gives unbridled powers to the Army in so-called “disturbed areas” to maintain the status quo. These areas coincide with Indigenous lands where there is an Indigenous population, such as in Northeast India.

Independent Indigenous journalist and Fulbright scholar Makepeace Sitlhou reporting from the buffer zone in Manipur, India. Photo courtesy of Makepeace Sitlhou.

Historically, the heavy-handedness of the State has served as a fertile learning ground for testing adoption strategies to exercise control over other areas in India, always with the sinister goal of dispossessing Indigenous Peoples of resource-rich lands to create value for a few corporations and their beneficiaries. The use and justification of surveillance tech are pinned on creating the need for protection from “antinationals” or “terrorists,” terms commonly used to label and libel anyone critical of the government. Both the Duterte and Marcos Jr. governments have continued to put Indigenous activists at risk through the practice of “red tagging” to suggest links to communists in the Philippines.

Online violence often precedes offline tactics, which led to deadly attacks on nine Indigenous leaders who were gunned down in December 2020 for protesting against the Philippine government’s dam projects. Almost a decade ago, in a similarly chilling encounter in June 2012, 17 Adivasis falsely accused of being “Maoists” were killed in extrajudicial killings in the state of Chhattisgarh, India, a mineral-rich zone that has attracted mining companies and led to the forced eviction of thousands of Adivasis. Most recently, facial recognition and drone technology are being adopted in the northeastern Indian state of Manipur to quell the ongoing civil unrest in the state.

Nina Sangma (far left) with Pyrou Chung, Director of Open Development Initiative, East West Management Institute, and independent Adivasi journalist and poet Jacinta Kerketta at RightsCon 2023 in Costa Rica.

The question of whom and how the government identifies as a person of interest will depend on whom technologies mark as suspicious. What existing databases will it tap into, and how are these databases equipped to correctly profile citizens, given the inherent flaws in pattern recognition and algorithmic biases in its training sets? Are there not some identities more likely to be marked suspicious based on their political histories, religions, and records of resistance to State oppression? And in whose favor will these technologies be deployed to justify occupation and win wars and elections? In digitized political economies, the incentives to use technology for good to prioritize the welfare of citizens are low when compared to wealth creation for a few that rely on maintaining the status quo through ongoing systems of oppression.

The role of disinformation campaigns in winning elections is an outcome all governments are aware of on both the ruling and opposition sides. We are in a war of narratives where the battleground is online, on social media and messaging apps, in the daily propaganda of corporate media and the hate speech targeting minorities, scholars, and critics, and the subversion of history with nationalistic texts and takeover of public institutions. AI will certainly prove to be a cost-effective way to spread hate speech and disinformation, not just in terms of efficacy, but by relying on existing quantities of hate speech and distortion of facts to churn out vast amounts of unverified information.

What that means is that we, the users, are forever doomed to be stuck in a content verification and content recommendation loop based on what we consume online, leading to more polarization than ever before. An especially gruesome thought is the industrial levels of technology-facilitated, gender-based violence that will be unleashed. Think revenge porn using AI-generated deep fakes, the building blocks of which lie in existing disinformation campaigns targeted against women to undermine their professional credibility and reputations. Online hate towards women is almost always of a sexually violent nature. Simply expressing an opinion as a woman is enough to warrant harassment and a slew of vile abuses from rape threats to calls for murder and deep fakes, the articulation of which will be gut-wrenchingly innovative with generative AI tools like ChatGPT and DALL-E.

In the UNESCO report “The Chilling: Global Trends in Online Violence against Women Journalists,” a staggering 86 percent of Indigenous women journalists reported experiencing online violence. The storm of technology-facilitated, gender-based violence spikes during conflict and for women journalists reporting from conflict areas.

A gruesome mirror of ground realities where women’s bodies become a battleground and rape is a political tool is being witnessed in the ongoing ethnic violence in Manipur. The trend of heightened attacks by trolls is something Indigenous journalist Makepeace Sitlhou (Kuki) has experienced firsthand on Twitter as a result of her award-winning ground reportage from volatile areas. She was unfazed by these online attacks, but things took a troubling turn when Sitlhou was named in a First Information Report for allegedly “. . . misleading statements and to destabilize a democratically elected government, disturb communal harmony by creating enmity among communities, and to defame the state government and one particular community.”

When asked if the likelihood of online attacks paving the way for offline violence was high and whether deep fakes could be used as an online tactic, Sitlhou agreed, saying, “The concerted trolling led up to an actual police case filed against me over my tweets.” When asked if this could mean that the use of AI-generated deep fakes was a possibility, she responded, “The way it’s happening to other journalists, it’s more likely an inevitability.”

In a remarkable turn of events, the Supreme Court put criminal proceedings against Sitlhou on hold after Kapil Sibal, a high-profile advocate, appeared for her. Her situation underlines the need for access to legal help, a privilege not many Indigenous journalists have. Sitlhou commented, “I was lucky to have easy access to good Supreme Court lawyers because I’m well connected to folks in Delhi. But for more grassroots journalists, they need to be regularly in touch with their editors and journalists’ unions and keep them posted on the threats that they’re receiving.”

Mia Magdalena Fokno at a BENECO protest in the Philippines. Photos courtesy of Mia Magdalena Fokno.

Another story of personal triumph over systemic injustice and the importance of pursuing litigation is that of Indigenous journalist and fact-checker Mia Magdalena Fokno (Kankanaey), who won a cyber misogyny case against a troll in the Philippines. She underscored the need for building safe spaces online and access to legal aid, saying, “Access to safe spaces and legal services is crucial for Indigenous women, especially female journalists, who face online attacks. The court victory against gender-based online sexual harassment is a significant triumph for women’s rights. This ruling sends a strong message against the use of technology to terrorize and intimidate victims through threats, unwanted sexual comments, and the invasion of privacy. This landmark case . . . underscores the importance of legal recourse in combating online harassment. This victory is a step towards creating safer online and physical environments for all, particularly for Indigenous women and female journalists, who are often targeted. Let this case inspire those who have suffered sexual harassment to seek justice and support each other in fostering safe spaces for everyone.”

Testimonies and firsthand accounts from Indigenous reporters are critical for challenging mainstream discourse and its dehumanizing narrative slants against Indigenous Peoples, which have set the stage for cultural and material genocides, something Indigenous Peoples are all too familiar with, having been reduced to tropes to suit insidious propaganda for centuries. Technology in the hands of overly represented communities disseminates these harmful narratives and metaphors at alarming scale and speed, particularly in Indigenous territories, which are often heavily militarized zones.

Mia Magdalena Fokno after filing a case against her troll, Renan Padawi.

“As a fact-checker based in Baguio City, Philippines, I recognize the evolving landscape of journalism in the age of AI,” Fokno said. “The emergence of sophisticated AI tools has amplified the spread of disinformation, presenting unique challenges that require specialized skills to address. Journalists, especially those engaged with Indigenous communities and human rights issues, need targeted training to discern and combat AI-generated misinformation effectively. This training is vital to uphold the integrity of information, a cornerstone of our democracy and cultural preservation, particularly in areas like northern Luzon where local narratives and Indigenous voices are pivotal. The need for fact-checkers to adapt and evolve with these technological advancements is more crucial than ever.”

A deeper dive into large language models reveals the fallacy of Big Tech and AI. Large language models (machine learning models that can comprehend and generate human language text) are designed to respond not only to lingua franca, but also to the most mainstreamed beliefs, biases, and cultures, leaving out anyone who is not adequately represented in these data sets. They will therefore be representative only of those who have access to social, economic, and cultural capital, and digital literacy and presence.

An image I posted on Facebook recently of Taylor Swift standing next to someone wearing a Nazi swastika symbol on his t-shirt was taken down because it went against community standards and resulted in profile restrictions. On requesting a review of the image, Facebook sent me a notification stating that I could appeal its decision to the Oversight Board, a group of experts enlisted to build transparency in how content is moderated. But how feasible is that, given the sheer volume of cases generated daily? And more importantly, which kinds of cases among the thousands, if not millions, that are flagged make it to the final review table? If the bots cannot differentiate the problem at the source level or read the intent behind sharing a problematic image, can they be expected to think critically as so many believe AI will be able to do?

Given this existing lack of nuance, AI will, in a nutshell, perpetuate western or neocolonial standards of beauty and bias with limited localized context. Missing data means missing people. With ChatGPT, for example, there is a real possibility of loss in translation between what is prompted by the user and what is understood by machine learning and the results it generates. From intellectual theft of Indigenous art, motifs, and techniques to the appropriation of Indigenous culture and identity by mainstream communities, e-commerce websites and high fashion stores will be replete with stolen Indigenous knowledge.

Tufan Chakma with his art and family. Courtesy of Tufan Chakma.

With the advent of DALL-E, which can create art from text prompts, Indigenous artists like Tufan Chakma are even more vulnerable to copyright infringement, something he is no stranger to. “I faced it too many times,” he said. “The action I took against it was to write a Facebook post. I also reached out to those who had used my artwork without my permission.” The only protection he gets is in the form of his online followers flagging misuse of his artwork. He added that plagiarism happens with digital artwork because it is a lucrative business to convert it into commercial products like t-shirts and other merchandise.

Art by Tufan Chakma. Courtesy of Tufan Chakma.

Apart from the loss in potential revenue, the deeper impact of plagiarism on the artist, according to Chakma, is that “Plagiarism can demotivate anyone who is creating art to engage with society critically.” Chakma’s artwork is political and raises awareness through artistic expression of the plight of Bangladesh’s Indigenous communities. Can AI generate art that speaks truth to power in the manner of an artist who, like Chakma, seeks to change the world for the better? Will AI be able to understand power dynamics and generate art that sees historical injustice and prejudice?

Indigenous identity is encoded in our clothing woven with motifs rife with meaning, intrinsic to our moral codes, core values, and our relationship with nature and the cosmos, a tangible link to our ancestries. Initiatives like the IKDS framework and the Indigenous Navigator recognize the need for Indigenous Peoples to own and manage the data we collect and reinforce us as its rightful owners to govern ourselves, our lands, territories, and resources. Rojieka Mahin, PACOS Trust’s Coordinator for the Local and International Relations Unit, said, “Recognizing the impact of [Indigenous Knowledge Data Sovereignty] on all Indigenous Peoples, particularly Indigenous women and youth, is essential. IKDS embodies a commitment to learning, inclusivity, and improved governance of Indigenous knowledge and data sovereignty. This approach will not only keep our community informed but also empower them to share their experiences with others, underlining the importance of establishing community protocols within their villages. Additionally, it will enhance the community’s appreciation for their Indigenous knowledge, especially when it comes to passing it down to the younger generation.”

Ultimately, Big Tech is colonization in fancy code. Smartphones, smart homes, smart cities: the focus is on efficiency and productivity. But in the rush to do more, we are in danger of losing our humanity. The reality is that we will soon be outsmarted out of existence by AI if we are not vigilant.

Nina Sangma (Garo) is an Indigenous rights advocate and Communications Program Coordinator at the Asia Indigenous Peoples Pact.